apache atlas vs hive metastore

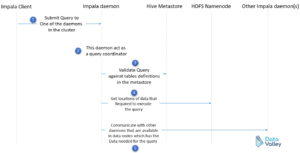

And thats where Metadata Propagator comes in: whenever a new PR is approved, a script runs in Drone (our CI/CD orchestrator), sends that documentation to a AWS S3 bucket, and calls an endpoint in Metadata Propagator, which creates events to update the documentation. CLI/edge nodes) or in the HiveServer2 server.  Another major use case is capturing these tables on Atlas in real-time provides the real-time insights into the technical metadata through Atlas to our customers. Heres a quick summary of everything weve discussed so far: Next, lets look at how their features differ from each other. However, this doesnt limit you to using Apache Atlas as you can connect any of your sources to a Hive metastore and use that to ingest metadata into Apache Atlas. Where developers & technologists share private knowledge with coworkers, Reach developers & technologists worldwide. What is the difference between Apache atlas and Apache falcon? In a state with the common law definition of theft, can you force a store to take cash by "pretending" to steal? Even with this approach, we faced two significant challenges: Given these challenges, we decided to deploy a listener on hive metastore server, so that we can capture any DDL changes on the server side. -metadata of Falcon dataflows is actually sinked to Atlas through Kafka topics so Atlas knows about Falcon metadata too and Atlas can include Falcon processes and its resulting meta objects (tables, hdfs folders, flows) into its lineage graphs.

Another major use case is capturing these tables on Atlas in real-time provides the real-time insights into the technical metadata through Atlas to our customers. Heres a quick summary of everything weve discussed so far: Next, lets look at how their features differ from each other. However, this doesnt limit you to using Apache Atlas as you can connect any of your sources to a Hive metastore and use that to ingest metadata into Apache Atlas. Where developers & technologists share private knowledge with coworkers, Reach developers & technologists worldwide. What is the difference between Apache atlas and Apache falcon? In a state with the common law definition of theft, can you force a store to take cash by "pretending" to steal? Even with this approach, we faced two significant challenges: Given these challenges, we decided to deploy a listener on hive metastore server, so that we can capture any DDL changes on the server side. -metadata of Falcon dataflows is actually sinked to Atlas through Kafka topics so Atlas knows about Falcon metadata too and Atlas can include Falcon processes and its resulting meta objects (tables, hdfs folders, flows) into its lineage graphs.  The next challenge was how we should handle the incremental loads. The default user name is admin and password is admin. Phase 1: Technical feasibility and onboard hive/sparkSQL/Teradata datasets to Atlas, Phase 3: Build tools on top of Atlas for creating/consuming the metadata, Phase 4: Enable Role-Based Access control on the platform. Both Solr and HBase are installed on the persistent Amazon EMR cluster as part of the Atlas installation. This way, the customers can do tagging, and we can enforce role-based access controls on these table without any delays. Created You can deploy these hooks on the gateways nodes (a.k.a. Measurable and meaningful skill levels for developers, San Francisco? Now when you choose Lineage, you should see the lineage of the table. This language has simple constructs that help users navigate Atlas data repositories. Another major problem is that we are dealing with unstructured, semi-structured, and various other types of data. If you havent read it, make sure to take a look! As shown following, Atlas shows the existence of column location_id in both of the tables created previously: As shown following, Atlas shows the total number of tables. Announcing the Stacks Editor Beta release!

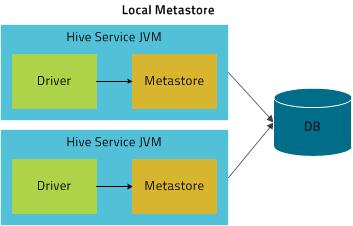

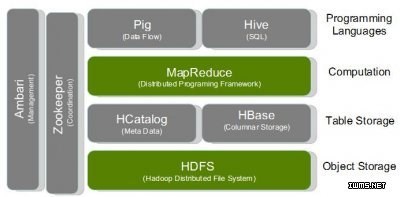

The next challenge was how we should handle the incremental loads. The default user name is admin and password is admin. Phase 1: Technical feasibility and onboard hive/sparkSQL/Teradata datasets to Atlas, Phase 3: Build tools on top of Atlas for creating/consuming the metadata, Phase 4: Enable Role-Based Access control on the platform. Both Solr and HBase are installed on the persistent Amazon EMR cluster as part of the Atlas installation. This way, the customers can do tagging, and we can enforce role-based access controls on these table without any delays. Created You can deploy these hooks on the gateways nodes (a.k.a. Measurable and meaningful skill levels for developers, San Francisco? Now when you choose Lineage, you should see the lineage of the table. This language has simple constructs that help users navigate Atlas data repositories. Another major problem is that we are dealing with unstructured, semi-structured, and various other types of data. If you havent read it, make sure to take a look! As shown following, Atlas shows the existence of column location_id in both of the tables created previously: As shown following, Atlas shows the total number of tables. Announcing the Stacks Editor Beta release!  So if you install both Hive and Atlas, there will be two kinds of metadata, and this will be stored in the mentioned spots. The AWS Glue Data Catalog provides a unified metadata repository across a variety of data sources and data formats.

So if you install both Hive and Atlas, there will be two kinds of metadata, and this will be stored in the mentioned spots. The AWS Glue Data Catalog provides a unified metadata repository across a variety of data sources and data formats.  The default login details are username admin and password admin. Maybe that is where your confusion comes from. You can use this setup to dynamically classify data and view the lineage of data as it moves through various processes. AWS Glue Data Catalog integrates with Amazon EMR, and also Amazon RDS, Amazon Redshift, Redshift Spectrum, and Amazon Athena. How does metadata ingestion work in Amundsen and Atlas? To ensure security and privacy of the data and access control. Andrew Park is a cloud infrastructure architect at AWS. To help to ensure the quality of the data. In a way Falcon is a much improved Oozie. How can I get an AnyDice conditional to convert a sequence to a boolean? We wanted to make sure our data governance solution is always consistent with what is available on the cluster. Created However, both metadata tools adopt different approaches to metadata ingestion. However, there are blog posts from the community that can provide insights into how data teams are using Apache Atlas and other metadata catalog tools. Progressive Elaboration (or, a box full of bees), Get more value out of your application logs in Stackdriver, Why you should govern data access through Purpose-Based Access Control, MetadataMeet Big Datas Little Brother. To demonstrate the functionality of Apache Atlas, we do the following in this post: The steps following guide you through the installation of Atlas on Amazon EMR by using the AWS CLI. When developing this architecture, we wanted a simple and easy to maintain solution without tightly coupling all of our tools to Atlas. This architecture provides a lot of flexibility. After successfully creating an SSH tunnel, use following URL to access the Apache Atlas UI. To set up a web interface for Hue, follow the steps in the Amazon EMR documentation. Connect and share knowledge within a single location that is structured and easy to search. Create a new lookup external table called, Choose Classification from the left pane, and choose the +, Choose the classification that you created (. Using Hive metastore for client application performance, New to Titan db, help installing titan db, Titan With Cassandra as Backend : creating , storing and traversing the graph in java, import metadata from RDBMS into Apache Atlas. Data governance is a vast topic, and in this prototype, we are concentrating only on how to set/view tags on the file system. How does metadata ingestion work in Amundsen? As we said in our previous article, we have an older data catalog that resides on spreadsheets and was manually populated, and now were replacing it for Apache Atlas. However, according some docs of Hive, it shows that the metadata will be stored in RDBMS such as MySql. Isnt it easy? This architecture allows us to decouple the propagation logic from our Airflow DAGs and other scripts, and allows us to easily compose different actions whenever theres an input event. User management for self-managed We then evaluated Apache Atlas and found that we can leverage it for building the data tagging capabilities and as a metadata store. Provide a centralized platform for all Hadoop and Teradata customers to generate and consume the technical metadata.

The default login details are username admin and password admin. Maybe that is where your confusion comes from. You can use this setup to dynamically classify data and view the lineage of data as it moves through various processes. AWS Glue Data Catalog integrates with Amazon EMR, and also Amazon RDS, Amazon Redshift, Redshift Spectrum, and Amazon Athena. How does metadata ingestion work in Amundsen and Atlas? To ensure security and privacy of the data and access control. Andrew Park is a cloud infrastructure architect at AWS. To help to ensure the quality of the data. In a way Falcon is a much improved Oozie. How can I get an AnyDice conditional to convert a sequence to a boolean? We wanted to make sure our data governance solution is always consistent with what is available on the cluster. Created However, both metadata tools adopt different approaches to metadata ingestion. However, there are blog posts from the community that can provide insights into how data teams are using Apache Atlas and other metadata catalog tools. Progressive Elaboration (or, a box full of bees), Get more value out of your application logs in Stackdriver, Why you should govern data access through Purpose-Based Access Control, MetadataMeet Big Datas Little Brother. To demonstrate the functionality of Apache Atlas, we do the following in this post: The steps following guide you through the installation of Atlas on Amazon EMR by using the AWS CLI. When developing this architecture, we wanted a simple and easy to maintain solution without tightly coupling all of our tools to Atlas. This architecture provides a lot of flexibility. After successfully creating an SSH tunnel, use following URL to access the Apache Atlas UI. To set up a web interface for Hue, follow the steps in the Amazon EMR documentation. Connect and share knowledge within a single location that is structured and easy to search. Create a new lookup external table called, Choose Classification from the left pane, and choose the +, Choose the classification that you created (. Using Hive metastore for client application performance, New to Titan db, help installing titan db, Titan With Cassandra as Backend : creating , storing and traversing the graph in java, import metadata from RDBMS into Apache Atlas. Data governance is a vast topic, and in this prototype, we are concentrating only on how to set/view tags on the file system. How does metadata ingestion work in Amundsen? As we said in our previous article, we have an older data catalog that resides on spreadsheets and was manually populated, and now were replacing it for Apache Atlas. However, according some docs of Hive, it shows that the metadata will be stored in RDBMS such as MySql. Isnt it easy? This architecture allows us to decouple the propagation logic from our Airflow DAGs and other scripts, and allows us to easily compose different actions whenever theres an input event. User management for self-managed We then evaluated Apache Atlas and found that we can leverage it for building the data tagging capabilities and as a metadata store. Provide a centralized platform for all Hadoop and Teradata customers to generate and consume the technical metadata.

Amundsen boosted the productivity of data practitioners at Lyft by 20%. How is metadata ingestion in Apache Atlas different from Amundsen? Internally, it was implemented using AIOHTTP, which enables it to perform well under heavy loads and allows us to process requests asynchronously. To make things easier, weve summarized everything about Amundsen and Atlas with a feature matrix. In this post, we outline the steps required to install and configure an Amazon EMR cluster with Apache Atlas by using the AWS CLI or CloudFormation. 11-25-2016 Atlas already provides hive hooks for capturing the data definition language (DDL). The metastore listener listens for table create/change/drop events and sends this change to Atlas via message bus (Kafka). Set up the metastore listener to be aware of the messaging bus (Kafka) by adding Kafka info in the atlas-application Properties file in the same config directory where hive-site.xml resides: The metastore listener code consists of a class called AtlasMhook that extends the MetaStoreEventListener and classes for each event. You have a working local copy of the AWS CLI package configured, with access and secret keys. Why did the Federal reserve balance sheet capital drop by 32% in Dec 2015? As shown following, the lineage provides information about its base tables and is an intersect table of two tables.

Amundsen boosted the productivity of data practitioners at Lyft by 20%. How is metadata ingestion in Apache Atlas different from Amundsen? Internally, it was implemented using AIOHTTP, which enables it to perform well under heavy loads and allows us to process requests asynchronously. To make things easier, weve summarized everything about Amundsen and Atlas with a feature matrix. In this post, we outline the steps required to install and configure an Amazon EMR cluster with Apache Atlas by using the AWS CLI or CloudFormation. 11-25-2016 Atlas already provides hive hooks for capturing the data definition language (DDL). The metastore listener listens for table create/change/drop events and sends this change to Atlas via message bus (Kafka). Set up the metastore listener to be aware of the messaging bus (Kafka) by adding Kafka info in the atlas-application Properties file in the same config directory where hive-site.xml resides: The metastore listener code consists of a class called AtlasMhook that extends the MetaStoreEventListener and classes for each event. You have a working local copy of the AWS CLI package configured, with access and secret keys. Why did the Federal reserve balance sheet capital drop by 32% in Dec 2015? As shown following, the lineage provides information about its base tables and is an intersect table of two tables.  Our first priority with Atlas was to catalog information about different kinds of metadata from a few sources: Table schemas from Hive metastore, which are generated whenever tables are created or updated in the lake house; Data lineage, which is defined by the transformations applied in the ETL pipelines; Data classification, such as what informations are sensible or PII; and documentation of tables and categories, which were being written by data analysts and engineers in spreadsheets. Here i am confused that where these above tool will fit in my use case(general questionj)?. Join over 5k data leaders from companies like Amazon, Apple, and Spotify and knowledge.

Our first priority with Atlas was to catalog information about different kinds of metadata from a few sources: Table schemas from Hive metastore, which are generated whenever tables are created or updated in the lake house; Data lineage, which is defined by the transformations applied in the ETL pipelines; Data classification, such as what informations are sensible or PII; and documentation of tables and categories, which were being written by data analysts and engineers in spreadsheets. Here i am confused that where these above tool will fit in my use case(general questionj)?. Join over 5k data leaders from companies like Amazon, Apple, and Spotify and knowledge.

This blog post was last reviewed and updated April, 2022. After creating the Hue superuser, you can use the Hue console to run hive queries. Asking for help, clarification, or responding to other answers. 5. Assessing the data discovery, lineage, and governance features. At first login, you are asked to create a Hue superuser, as shown following. Next, view all the entities belonging to this classification. environments.

This blog post was last reviewed and updated April, 2022. After creating the Hue superuser, you can use the Hue console to run hive queries. Asking for help, clarification, or responding to other answers. 5. Assessing the data discovery, lineage, and governance features. At first login, you are asked to create a Hue superuser, as shown following. Next, view all the entities belonging to this classification. environments. Among all the features that Apache Atlas offers, the core feature of our interest in this post is the Apache Hive metadata management and data lineage. Nikita Jaggi is a senior big data consultant with AWS. Amundsen Vs Atlas: Key factors of comparison. You also might have to add an inbound rule for SSH (port 22) to the masters security group. On the left pane of the Atlas UI, ensure Search is selected, and enter the following information in the two fields listed following: The output of the preceding query should look like this: To view the lineage of the created tables, you can use the Atlas web search. Then, whenever those pipelines run, they send a request to Metadata Propagator, creating events to update table lineage and tags. To avoid unnecessary charges, you should remove your Amazon EMR cluster after youre done experimenting with it. To capture the metadata of datasets for security and end-user data consumption purposes.

With Atlas you can really apply governance by collecting all metadata querying and tagging it and Falcon can maybe execute processes that evolve around that by moving data from one place to another (and yes, Falcon moving a dataset from an analysis cluster to an archiving cluster is also about data governance/management), Find answers, ask questions, and share your expertise. Why is the comparative of "sacer" not attested? Having been a Linux solutions engineer for a long time, Andrew loves deep dives into Linux-related challenges.

With Atlas you can really apply governance by collecting all metadata querying and tagging it and Falcon can maybe execute processes that evolve around that by moving data from one place to another (and yes, Falcon moving a dataset from an analysis cluster to an archiving cluster is also about data governance/management), Find answers, ask questions, and share your expertise. Why is the comparative of "sacer" not attested? Having been a Linux solutions engineer for a long time, Andrew loves deep dives into Linux-related challenges.  From there you can create tag based policies from Ranger to manage access to anything 'PII' tagged in Atlas. Get the latest eBay Tech Blog posts via RSS and Twitter, GraphLoad: A Framework to Load and Update Over Ten-Billion-Vertex Graphs with Performance and Consistency, eBay Event Notification Platform: Listener SDKs, eBay Connect 2021: How Our Newest APIs Are Enhancing Customer Experiences, Two Years Later: APIs are the Destination, Adapting Continuous Integration and Delivery to Hardware Quality. While Amundsen uses neo4j for its database metadata, Apache Atlas relies on JanusGraph. You can classify columns and databases in a similar manner. Multiple data clusters (HDP 2.4.2, Hive 1.2, Spark 2.1.0) Atlas cluster (HDP 2.6, Atlas 1.0.0 alpha). If you want to be part of an innovative team and contribute to top-notch projects like this one, check out our open roles! Here are some step-by-step setup guides to help you deploy these tools: Amundsen has regular updates and has a large community supporting the project. The metadata is stored in HBase database.The HBase database is maintained by Apache Atlas itself.

From there you can create tag based policies from Ranger to manage access to anything 'PII' tagged in Atlas. Get the latest eBay Tech Blog posts via RSS and Twitter, GraphLoad: A Framework to Load and Update Over Ten-Billion-Vertex Graphs with Performance and Consistency, eBay Event Notification Platform: Listener SDKs, eBay Connect 2021: How Our Newest APIs Are Enhancing Customer Experiences, Two Years Later: APIs are the Destination, Adapting Continuous Integration and Delivery to Hardware Quality. While Amundsen uses neo4j for its database metadata, Apache Atlas relies on JanusGraph. You can classify columns and databases in a similar manner. Multiple data clusters (HDP 2.4.2, Hive 1.2, Spark 2.1.0) Atlas cluster (HDP 2.6, Atlas 1.0.0 alpha). If you want to be part of an innovative team and contribute to top-notch projects like this one, check out our open roles! Here are some step-by-step setup guides to help you deploy these tools: Amundsen has regular updates and has a large community supporting the project. The metadata is stored in HBase database.The HBase database is maintained by Apache Atlas itself. A successful import looks like the following: After a successful Hive import, you can return to the Atlas Web UI to search the Hive database or the tables that were imported. The script asks for your user name and password for Atlas. At eBay, the hive metastore listener is helping us in two ways: Tags: non-technical users. On the other hand, Apache Atlas has a public Jira project, but without a clearly defined roadmap. Finally, Metadata Propagator reads the YAML files and updates the definitions in Atlas.

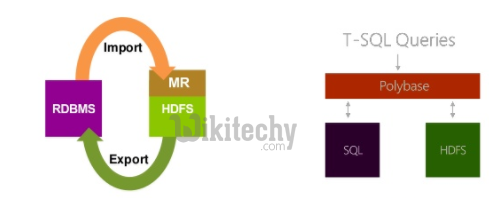

The automation shell script assumes the following: On successful execution of the command, output containing a cluster ID is displayed: Use the following command to list the names of active clusters (your cluster shows on the list after it is ready): In the output of the previous command, look for the server name EMR-Atlas (unless you changed the default name in the script). Near real-time metadata sync between the source and destination through the metastore listener and clusters enhanced our developer productivity a lot, since they dont need to wait for the batch sync-up to happen between these clusters. For example, it can receive a request to create a table documentation, or we can push the same event directly to a Kafka topic. This installation creates an Amazon EMR cluster with Hadoop, HBase, Hive, and Zookeeper. This allows us to tag source columns as PII or sensitive information and all derived columns in the data lake will be correctly classified. To learn more, see our tips on writing great answers. Another thing that is on our radar for the future is automating the definition of data lineage and tags. Requests made to the API create events in Kafka, which acts both as an internal queue of tasks to process and as an interface for push-based systems. On each DDL event (create/alter/drop), retrieve the current Table object and instantiate the corresponding event class accordingly: Use a similar framework for the alter and drop table events. How to run a crontab job only if a file exists? You should see a screen like that shown following. Apache Atlas relies on out-of-the-box integration with metadata sources from the Hadoop ecosystem projects like Hive, Sqoop, Storm, and so on. Amundsen, Lyfts data discovery and metadata platform was announced in April 2019 and open-sourced later in the same year. We want to converge these local data governances into one single platform and provide a holistic view of the entire platform. What are the differences in the underlying architecture? Initially, we were scanning the tables and databases on the source clusters, identifying the missing tables/databases, and then recreating the tables on the destination cluster. Highly scalable, massively reliable, and always on. I have installed Atlas, Hive and Hadoop and configured them correctly. As the eBay analytics data platform team, we want to have the following capabilities on the platform level for all data existing on our Hadoop and Teradata clusters. A hook registers to listen to any metadata updates and CRUD operations at the source and then, publishes changes using Kafka messages. It is not that clear what Data Governance actually is. Lets check some use cases where Metadata Propagator is being used: Our old data catalog was a spreadsheet in Google Sheets where we described what each table, column and data category represented. Amazon EMR is a managed service that simplifies the implementation of big data frameworks such as Apache Hadoop and Spark. 01:54 PM. In this new format, we could use CI/CD orchestration for schema validations and also publish those documentation changes to other systems. Next, you can search Atlas for entities using the Atlas domain-specific language (DSL), which is a SQL-like query language. Big Data, Data Infrastructure and Services, Hadoop, OSS. Apache Atlas metadata architecture. A sample configuration file for the Hive service to reference an external RDS Hive metastore can be found in the Amazon EMR documentation. Discover metadata using the Atlas domain-specific language (DSL). If we want to also push data to any other visualization tool, such as Looker, we just need to add another consumer that outputs data to it. So you can say that column B in Hive table Y holds sensitive data by assigning a 'PII' tag to it. Synchronize hive metadata and Atlas repo with hive metastore event listener: Hive data and Atlas reside in separate clusters in which Atlas functions as a repo for several Hive data clusters. What happened after the first video conference between Jason and Sarris? In our previous article, we discussed the first steps of our Data Governance team, how we chose Apache Atlas as our governance tool, and how we needed to create a new service to propagate metadata from different systems to Atlas. For example, to see the lineage of the intersect table trip_details_by_zone created earlier, enter the following information: Now choose the table name trip_details_by_zone to view the details of the table as shown following. Amundsen is known for its involved and buzzing community - with over 37 organizations officially using it and 100+ contributors. If a CLI or edge node misses the hook, this will cause inconsistency in the table metadata on the cluster and the Atlas side. In our environment, we have a requirement to keep some of the tables and databases in sync between some clusters. projects, weve codified our learnings on what Atlas and Falcon serve very different purposes, but there are some areas where they touch base. Ltd. |Privacy Policy & Terms of UseLicense AgreementData Processing Agreement. In this article, well dive more deeply into our data architecture, what are our use cases for Apache Atlas, and what solutions we developed to make everything work. Before proceeding, wait until the CloudFormation stack events show that the status of the stack has reached CREATE_COMPLETE. 2022 Atlan Pte. For metadata to be imported in Atlas, the Atlas Hive import tool is only available by using the command line on the Amazon EMR server (theres no web UI.)

The automation shell script assumes the following: On successful execution of the command, output containing a cluster ID is displayed: Use the following command to list the names of active clusters (your cluster shows on the list after it is ready): In the output of the previous command, look for the server name EMR-Atlas (unless you changed the default name in the script). Near real-time metadata sync between the source and destination through the metastore listener and clusters enhanced our developer productivity a lot, since they dont need to wait for the batch sync-up to happen between these clusters. For example, it can receive a request to create a table documentation, or we can push the same event directly to a Kafka topic. This installation creates an Amazon EMR cluster with Hadoop, HBase, Hive, and Zookeeper. This allows us to tag source columns as PII or sensitive information and all derived columns in the data lake will be correctly classified. To learn more, see our tips on writing great answers. Another thing that is on our radar for the future is automating the definition of data lineage and tags. Requests made to the API create events in Kafka, which acts both as an internal queue of tasks to process and as an interface for push-based systems. On each DDL event (create/alter/drop), retrieve the current Table object and instantiate the corresponding event class accordingly: Use a similar framework for the alter and drop table events. How to run a crontab job only if a file exists? You should see a screen like that shown following. Apache Atlas relies on out-of-the-box integration with metadata sources from the Hadoop ecosystem projects like Hive, Sqoop, Storm, and so on. Amundsen, Lyfts data discovery and metadata platform was announced in April 2019 and open-sourced later in the same year. We want to converge these local data governances into one single platform and provide a holistic view of the entire platform. What are the differences in the underlying architecture? Initially, we were scanning the tables and databases on the source clusters, identifying the missing tables/databases, and then recreating the tables on the destination cluster. Highly scalable, massively reliable, and always on. I have installed Atlas, Hive and Hadoop and configured them correctly. As the eBay analytics data platform team, we want to have the following capabilities on the platform level for all data existing on our Hadoop and Teradata clusters. A hook registers to listen to any metadata updates and CRUD operations at the source and then, publishes changes using Kafka messages. It is not that clear what Data Governance actually is. Lets check some use cases where Metadata Propagator is being used: Our old data catalog was a spreadsheet in Google Sheets where we described what each table, column and data category represented. Amazon EMR is a managed service that simplifies the implementation of big data frameworks such as Apache Hadoop and Spark. 01:54 PM. In this new format, we could use CI/CD orchestration for schema validations and also publish those documentation changes to other systems. Next, you can search Atlas for entities using the Atlas domain-specific language (DSL), which is a SQL-like query language. Big Data, Data Infrastructure and Services, Hadoop, OSS. Apache Atlas metadata architecture. A sample configuration file for the Hive service to reference an external RDS Hive metastore can be found in the Amazon EMR documentation. Discover metadata using the Atlas domain-specific language (DSL). If we want to also push data to any other visualization tool, such as Looker, we just need to add another consumer that outputs data to it. So you can say that column B in Hive table Y holds sensitive data by assigning a 'PII' tag to it. Synchronize hive metadata and Atlas repo with hive metastore event listener: Hive data and Atlas reside in separate clusters in which Atlas functions as a repo for several Hive data clusters. What happened after the first video conference between Jason and Sarris? In our previous article, we discussed the first steps of our Data Governance team, how we chose Apache Atlas as our governance tool, and how we needed to create a new service to propagate metadata from different systems to Atlas. For example, to see the lineage of the intersect table trip_details_by_zone created earlier, enter the following information: Now choose the table name trip_details_by_zone to view the details of the table as shown following. Amundsen is known for its involved and buzzing community - with over 37 organizations officially using it and 100+ contributors. If a CLI or edge node misses the hook, this will cause inconsistency in the table metadata on the cluster and the Atlas side. In our environment, we have a requirement to keep some of the tables and databases in sync between some clusters. projects, weve codified our learnings on what Atlas and Falcon serve very different purposes, but there are some areas where they touch base. Ltd. |Privacy Policy & Terms of UseLicense AgreementData Processing Agreement. In this article, well dive more deeply into our data architecture, what are our use cases for Apache Atlas, and what solutions we developed to make everything work. Before proceeding, wait until the CloudFormation stack events show that the status of the stack has reached CREATE_COMPLETE. 2022 Atlan Pte. For metadata to be imported in Atlas, the Atlas Hive import tool is only available by using the command line on the Amazon EMR server (theres no web UI.)

Discover & explore all your data assets For more information about Amazon EMR or any other big data topics on AWS, see the EMR blog posts on the AWS Big Data blog. We have some dedicated clusters primarily running only sparkSQL workloads by connecting to hive metastore servers. -Another core feature is that you assign tags to all metadata entities on Atlas. Do not lose the superuser credentials. One big difference between traditional data governance and Hadoop big data governance is the sources of the data that are out of the platform team's control. In conventional data warehouses, we had everything under check, whether it was how much data came in or from where the data came. Also, when we move data from traditional data warehouses to the Hadoop world, a lot of metadata associated with the data sets gets dropped, making it hard for the data steward to manage all the data in the big data ecosystem. To read more about Atlas and its features, see the Atlas website.

Discover & explore all your data assets For more information about Amazon EMR or any other big data topics on AWS, see the EMR blog posts on the AWS Big Data blog. We have some dedicated clusters primarily running only sparkSQL workloads by connecting to hive metastore servers. -Another core feature is that you assign tags to all metadata entities on Atlas. Do not lose the superuser credentials. One big difference between traditional data governance and Hadoop big data governance is the sources of the data that are out of the platform team's control. In conventional data warehouses, we had everything under check, whether it was how much data came in or from where the data came. Also, when we move data from traditional data warehouses to the Hadoop world, a lot of metadata associated with the data sets gets dropped, making it hard for the data steward to manage all the data in the big data ecosystem. To read more about Atlas and its features, see the Atlas website.  Since we were planning to move everything to Atlas, but we also had a lot of users that were using the spreadsheet catalog daily, we decided to use a hybrid approach: migrating the documentation from Sheets to YAML files in a Github repo, which would be replicated to both spreadsheet catalog and Atlas whenever new files are merged. Visual querying & connections for

Since we were planning to move everything to Atlas, but we also had a lot of users that were using the spreadsheet catalog daily, we decided to use a hybrid approach: migrating the documentation from Sheets to YAML files in a Github repo, which would be replicated to both spreadsheet catalog and Atlas whenever new files are merged. Visual querying & connections for

- Masonite Floor Protection Sheets

- Turkey Manure For Sale Near Me

- Teku Terra Cotta Plastic

- Refrigerator Cabinet Surround Lowe's

- Padding Compound Alternative

apache atlas vs hive metastore