cookies that may not be particu

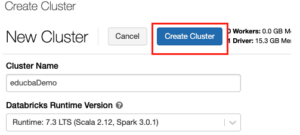

Any cookies that may not be particularly necessary for the website to function and is used specifically to collect user personal data via analytics, ads, other embedded contents are termed as non-necessary cookies. Yes. To securely access AWS resources without using AWS keys, you can launch Databricks clusters with instance profiles. To enable Photon acceleration, select the Use Photon Acceleration checkbox. The scope of the key is local to each cluster node and is destroyed along with the cluster node itself.  When you distribute your workload with Spark, all of the distributed processing happens on worker nodes. If a cluster has zero workers, you can run non-Spark commands on the driver node, but Spark commands will fail. Find centralized, trusted content and collaborate around the technologies you use most. A 150 GB encrypted EBS container root volume used by the Spark worker. But opting out of some of these cookies may affect your browsing experience. Cluster tags allow you to easily monitor the cost of cloud resources used by various groups in your organization. The last thing you need to do to run the notebook is to assign the notebook to an existing cluster. During cluster creation or edit, set: See Create and Edit in the Clusters API reference for examples of how to invoke these APIs. During its lifetime, the key resides in memory for encryption and decryption and is stored encrypted on the disk. create cluster databricks azure microsoft docs configure name Microsoft Learn: Azure Databricks. Apache, Apache Spark, Spark, and the Spark logo are trademarks of the Apache Software Foundation. cluster azure databricks create microsoft docs running indicates state The default cluster mode is Standard.

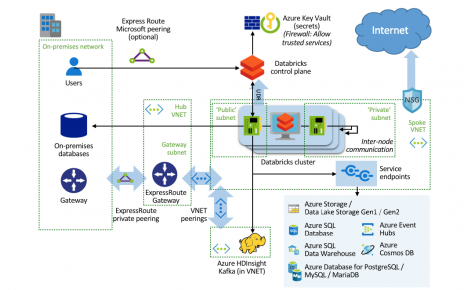

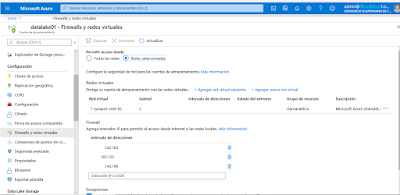

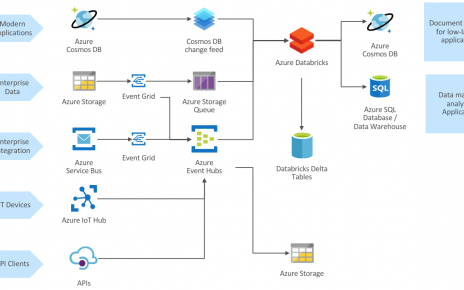

When you distribute your workload with Spark, all of the distributed processing happens on worker nodes. If a cluster has zero workers, you can run non-Spark commands on the driver node, but Spark commands will fail. Find centralized, trusted content and collaborate around the technologies you use most. A 150 GB encrypted EBS container root volume used by the Spark worker. But opting out of some of these cookies may affect your browsing experience. Cluster tags allow you to easily monitor the cost of cloud resources used by various groups in your organization. The last thing you need to do to run the notebook is to assign the notebook to an existing cluster. During cluster creation or edit, set: See Create and Edit in the Clusters API reference for examples of how to invoke these APIs. During its lifetime, the key resides in memory for encryption and decryption and is stored encrypted on the disk. create cluster databricks azure microsoft docs configure name Microsoft Learn: Azure Databricks. Apache, Apache Spark, Spark, and the Spark logo are trademarks of the Apache Software Foundation. cluster azure databricks create microsoft docs running indicates state The default cluster mode is Standard.  Create Azure Data Lake Storage Gen2 Storage Account, Mount ADSL Gen2 to Cluster using service principal and OAuth 2.0, Create Azure Databricks Cluster - Azure Data Lake Storage Credential Passthrough, Provide a static Public IP Address to On-Premises or Other Cloud Infrastructure Firewall, S2S VPN Between Databricks Cluster and RDS Services, Read data from Azure Data Lake Storage Gen2, Read data from Azure Synapse Analyst - SQL Pool, Write data to Azure Data Lake Storage Gen2, Write data to Power BI Streaming Datasets API, Set up Azure Private Link with Azure Databricks. This hosts Spark services and logs. To view or add a comment, sign in, Great post Sunil ! This applies especially to workloads whose requirements change over time (like exploring a dataset during the course of a day), but it can also apply to a one-time shorter workload whose provisioning requirements are unknown. The nodes primary private IP address is used to host Databricks internal traffic. Databricks offers several types of runtimes and several versions of those runtime types in the Databricks Runtime Version drop-down when you create or edit a cluster. To subscribe to this RSS feed, copy and paste this URL into your RSS reader. The default AWS capacity limit for these volumes is 20 TiB. azure databricks data event security level databricks clusters cli On the cluster details page, click the Spark Cluster UI - Master tab. In addition, only High Concurrency clusters support table access control. Why is the comparative of "sacer" not attested?

Create Azure Data Lake Storage Gen2 Storage Account, Mount ADSL Gen2 to Cluster using service principal and OAuth 2.0, Create Azure Databricks Cluster - Azure Data Lake Storage Credential Passthrough, Provide a static Public IP Address to On-Premises or Other Cloud Infrastructure Firewall, S2S VPN Between Databricks Cluster and RDS Services, Read data from Azure Data Lake Storage Gen2, Read data from Azure Synapse Analyst - SQL Pool, Write data to Azure Data Lake Storage Gen2, Write data to Power BI Streaming Datasets API, Set up Azure Private Link with Azure Databricks. This hosts Spark services and logs. To view or add a comment, sign in, Great post Sunil ! This applies especially to workloads whose requirements change over time (like exploring a dataset during the course of a day), but it can also apply to a one-time shorter workload whose provisioning requirements are unknown. The nodes primary private IP address is used to host Databricks internal traffic. Databricks offers several types of runtimes and several versions of those runtime types in the Databricks Runtime Version drop-down when you create or edit a cluster. To subscribe to this RSS feed, copy and paste this URL into your RSS reader. The default AWS capacity limit for these volumes is 20 TiB. azure databricks data event security level databricks clusters cli On the cluster details page, click the Spark Cluster UI - Master tab. In addition, only High Concurrency clusters support table access control. Why is the comparative of "sacer" not attested?  azure databricks

azure databricks  Databricks provisions EBS volumes for every worker node as follows: A 30 GB encrypted EBS instance root volume used only by the host operating system and Databricks internal services. You can select either gp2 or gp3 for your AWS EBS SSD volume type. By default, the max price is 100% of the on-demand price.

Databricks provisions EBS volumes for every worker node as follows: A 30 GB encrypted EBS instance root volume used only by the host operating system and Databricks internal services. You can select either gp2 or gp3 for your AWS EBS SSD volume type. By default, the max price is 100% of the on-demand price.  See Customer-managed keys for workspace storage. I have realized you are using a trial version, and I think the other answer is correct. Azure Databricks is a fully-managed version of the open-source Apache Spark analytics and data processing engine. Cluster create permission, you can select the Unrestricted policy and create fully-configurable clusters.

See Customer-managed keys for workspace storage. I have realized you are using a trial version, and I think the other answer is correct. Azure Databricks is a fully-managed version of the open-source Apache Spark analytics and data processing engine. Cluster create permission, you can select the Unrestricted policy and create fully-configurable clusters.

Add the following under Job > Configure Cluster > Spark > Init Scripts. Firstly, find Azure Databricks on the menu located on the left-hand side.

Add the following under Job > Configure Cluster > Spark > Init Scripts. Firstly, find Azure Databricks on the menu located on the left-hand side.  This category only includes cookies that ensures basic functionalities and security features of the website. The overall policy might become long, but it is easier to debug. databricks create cluster azure workspace

This category only includes cookies that ensures basic functionalities and security features of the website. The overall policy might become long, but it is easier to debug. databricks create cluster azure workspace  Lets add more code to our notebook. See AWS spot pricing. The Unrestricted policy does not limit any cluster attributes or attribute values. It focuses on creating and editing clusters using the UI. You can add custom tags when you create a cluster. High Concurrency clusters can run workloads developed in SQL, Python, and R. The performance and security of High Concurrency clusters is provided by running user code in separate processes, which is not possible in Scala. Databricks may store shuffle data or ephemeral data on these locally attached disks. Other users cannot attach to the cluster. Scales down based on a percentage of current nodes. Databricks uses Throughput Optimized HDD (st1) to extend the local storage of an instance. databricks delta azure apr updated automate creation tables loading This is referred to as autoscaling. If a pool does not have sufficient idle resources to create the requested driver or worker nodes, the pool expands by allocating new instances from the instance provider. databricks To avoid hitting this limit, administrators should request an increase in this limit based on their usage requirements. These cookies will be stored in your browser only with your consent. If you change the value associated with the key Name, the cluster can no longer be tracked by Databricks. In addition, on job clusters, Databricks applies two default tags: RunName and JobId. As an example, we will read a CSV file from the provided Website (URL): Pressing SHIFT+ENTER executes currently edited cell (command). Lets start with the Azure portal. The following link refers to a problem like the one you are facing. That means you can use a different language for each command. You can use init scripts to install packages and libraries not included in the Databricks runtime, modify the JVM system classpath, set system properties and environment variables used by the JVM, or modify Spark configuration parameters, among other configuration tasks. At any time you can terminate the cluster leaving its configuration saved youre not paying for metadata. | Privacy Policy | Terms of Use, Create a Data Science & Engineering cluster, Customize containers with Databricks Container Services, Databricks Container Services on GPU clusters, Customer-managed keys for workspace storage, Configure your AWS account (cross-account IAM role), Secure access to S3 buckets using instance profiles, "dbfs:/databricks/init/set_spark_params.sh", |cat << 'EOF' > /databricks/driver/conf/00-custom-spark-driver-defaults.conf, | "spark.sql.sources.partitionOverwriteMode" = "DYNAMIC", spark.

Lets add more code to our notebook. See AWS spot pricing. The Unrestricted policy does not limit any cluster attributes or attribute values. It focuses on creating and editing clusters using the UI. You can add custom tags when you create a cluster. High Concurrency clusters can run workloads developed in SQL, Python, and R. The performance and security of High Concurrency clusters is provided by running user code in separate processes, which is not possible in Scala. Databricks may store shuffle data or ephemeral data on these locally attached disks. Other users cannot attach to the cluster. Scales down based on a percentage of current nodes. Databricks uses Throughput Optimized HDD (st1) to extend the local storage of an instance. databricks delta azure apr updated automate creation tables loading This is referred to as autoscaling. If a pool does not have sufficient idle resources to create the requested driver or worker nodes, the pool expands by allocating new instances from the instance provider. databricks To avoid hitting this limit, administrators should request an increase in this limit based on their usage requirements. These cookies will be stored in your browser only with your consent. If you change the value associated with the key Name, the cluster can no longer be tracked by Databricks. In addition, on job clusters, Databricks applies two default tags: RunName and JobId. As an example, we will read a CSV file from the provided Website (URL): Pressing SHIFT+ENTER executes currently edited cell (command). Lets start with the Azure portal. The following link refers to a problem like the one you are facing. That means you can use a different language for each command. You can use init scripts to install packages and libraries not included in the Databricks runtime, modify the JVM system classpath, set system properties and environment variables used by the JVM, or modify Spark configuration parameters, among other configuration tasks. At any time you can terminate the cluster leaving its configuration saved youre not paying for metadata. | Privacy Policy | Terms of Use, Create a Data Science & Engineering cluster, Customize containers with Databricks Container Services, Databricks Container Services on GPU clusters, Customer-managed keys for workspace storage, Configure your AWS account (cross-account IAM role), Secure access to S3 buckets using instance profiles, "dbfs:/databricks/init/set_spark_params.sh", |cat << 'EOF' > /databricks/driver/conf/00-custom-spark-driver-defaults.conf, | "spark.sql.sources.partitionOverwriteMode" = "DYNAMIC", spark. If you dont want to allocate a fixed number of EBS volumes at cluster creation time, use autoscaling local storage. databricks gen2 If you attempt to select a pool for the driver node but not for worker nodes, an error occurs and your cluster isnt created. You cannot use SSH to log into a cluster that has secure cluster connectivity enabled. It falls back to sorting by highest score if no posts are trending. A cluster consists of one driver node and zero or more worker nodes. Job > Configure Cluster > Spark >Spark Conf, Job > Configure Cluster > Spark > Logging, Job > Configure Cluster > Spark > Init Scripts, Part 1: Installing Unravel Server on CDH+CM, Part 2: Enabling additional instrumentation, Adding a new node in an existing CDH cluster, Troubleshooting Cloudera Distribution of Apache Hadoop (CDH) issues, Adding a new node in an existing HDP cluster monitored by Unravel, Part 1: Installing Unravel Server on MapR, Installing Unravel Server on an EC2 instance, Connecting Unravel Server to a new or existing EMR cluster, Deploying Unravel from the AWS Marketplace, Creating private subnets for Unravel's Lambda function, Connecting Unravel Server to a new Dataproc cluster, Part 1: Installing Unravel on a Separate Azure VM, Part 2: Connecting Unravel to an HDInsight cluster, Deploying Unravel for Azure HDInsight from Azure Marketplace, Adding a new node in an existing HDI cluster monitored by Unravel, Setting up Azure MySQL for Unravel (Optional), Deploying Unravel for Azure Databricks from Azure Marketplace, Configure Azure Databricks Automated (Job) Clusters with Unravel, Library versions and licensing for OnDemand, Detecting resource contention in the cluster, Detecting apps using resources inefficiently, End-to-end monitoring of HBase databases and clusters, Best practices for end-to-end monitoring of Kafka, Kafka detecting lagging or stalled partitions, Using Unravel to tune Spark data skew and partitioning, How to intelligently monitor Kafka/Spark Streaming data pipeline, Using RDD caching to improve a Spark app's performance, Enabling the JVM sensor on HDP cluster-wide for MapReduce2 (MR), Integrating with Informatica Big Data Management, Deploying Unravel on security-enhanced Linux, Enabling multiple daemons for high-volume data, Configuring another version of OpenJDK for Unravel, Running verification scripts and benchmarks, Creating an AWS RDS CloudWatch Alarm for Free Storage Space, Elasticsearch storage requirements on the Unravel Node, Populating the Unravel Data Insights page, Configuring access for an Oracle database, Creating Active Directory Kerberos principals and keytabs for Unravel, Enable authentication for the Unravel Elastic daemon, Encrypting passwords in Unravel properties and settings, Importing a private certificate into Unravel truststore, Running Unravel daemons with a custom user, Using a private certificate authority with Unravel, Configuring forecasting and migration planning reports, Enabling LDAP authentication for Unravel UI, Enabling SAML authentication for Unravel Web UI, Configure Spark properties for Spark Worker daemon @ Unravel, Enable/disable live monitoring of Spark Streaming applications, Stopping, restarting, and configuring the AutoAction daemon. It is mandatory to procure user consent prior to running these cookies on your website. To reference a secret in the Spark configuration, use the following syntax: For example, to set a Spark configuration property called password to the value of the secret stored in secrets/acme_app/password: For more information, see Syntax for referencing secrets in a Spark configuration property or environment variable.

If you dont want to allocate a fixed number of EBS volumes at cluster creation time, use autoscaling local storage. databricks gen2 If you attempt to select a pool for the driver node but not for worker nodes, an error occurs and your cluster isnt created. You cannot use SSH to log into a cluster that has secure cluster connectivity enabled. It falls back to sorting by highest score if no posts are trending. A cluster consists of one driver node and zero or more worker nodes. Job > Configure Cluster > Spark >Spark Conf, Job > Configure Cluster > Spark > Logging, Job > Configure Cluster > Spark > Init Scripts, Part 1: Installing Unravel Server on CDH+CM, Part 2: Enabling additional instrumentation, Adding a new node in an existing CDH cluster, Troubleshooting Cloudera Distribution of Apache Hadoop (CDH) issues, Adding a new node in an existing HDP cluster monitored by Unravel, Part 1: Installing Unravel Server on MapR, Installing Unravel Server on an EC2 instance, Connecting Unravel Server to a new or existing EMR cluster, Deploying Unravel from the AWS Marketplace, Creating private subnets for Unravel's Lambda function, Connecting Unravel Server to a new Dataproc cluster, Part 1: Installing Unravel on a Separate Azure VM, Part 2: Connecting Unravel to an HDInsight cluster, Deploying Unravel for Azure HDInsight from Azure Marketplace, Adding a new node in an existing HDI cluster monitored by Unravel, Setting up Azure MySQL for Unravel (Optional), Deploying Unravel for Azure Databricks from Azure Marketplace, Configure Azure Databricks Automated (Job) Clusters with Unravel, Library versions and licensing for OnDemand, Detecting resource contention in the cluster, Detecting apps using resources inefficiently, End-to-end monitoring of HBase databases and clusters, Best practices for end-to-end monitoring of Kafka, Kafka detecting lagging or stalled partitions, Using Unravel to tune Spark data skew and partitioning, How to intelligently monitor Kafka/Spark Streaming data pipeline, Using RDD caching to improve a Spark app's performance, Enabling the JVM sensor on HDP cluster-wide for MapReduce2 (MR), Integrating with Informatica Big Data Management, Deploying Unravel on security-enhanced Linux, Enabling multiple daemons for high-volume data, Configuring another version of OpenJDK for Unravel, Running verification scripts and benchmarks, Creating an AWS RDS CloudWatch Alarm for Free Storage Space, Elasticsearch storage requirements on the Unravel Node, Populating the Unravel Data Insights page, Configuring access for an Oracle database, Creating Active Directory Kerberos principals and keytabs for Unravel, Enable authentication for the Unravel Elastic daemon, Encrypting passwords in Unravel properties and settings, Importing a private certificate into Unravel truststore, Running Unravel daemons with a custom user, Using a private certificate authority with Unravel, Configuring forecasting and migration planning reports, Enabling LDAP authentication for Unravel UI, Enabling SAML authentication for Unravel Web UI, Configure Spark properties for Spark Worker daemon @ Unravel, Enable/disable live monitoring of Spark Streaming applications, Stopping, restarting, and configuring the AutoAction daemon. It is mandatory to procure user consent prior to running these cookies on your website. To reference a secret in the Spark configuration, use the following syntax: For example, to set a Spark configuration property called password to the value of the secret stored in secrets/acme_app/password: For more information, see Syntax for referencing secrets in a Spark configuration property or environment variable.

has been included for your convenience. To configure a cluster policy, select the cluster policy in the Policy drop-down. If a species keeps growing throughout their 200-300 year life, what "growth curve" would be most reasonable/realistic? See Secure access to S3 buckets using instance profiles for information about how to create and configure instance profiles.

has been included for your convenience. To configure a cluster policy, select the cluster policy in the Policy drop-down. If a species keeps growing throughout their 200-300 year life, what "growth curve" would be most reasonable/realistic? See Secure access to S3 buckets using instance profiles for information about how to create and configure instance profiles.  databricks cluster quickstart How do people live in bunkers & not go crazy with boredom? databricks For help deciding what combination of configuration options suits your needs best, see cluster configuration best practices. Copy the entire contents of the public key file. Passthrough only (Legacy): Enforces workspace-local credential passthrough, but cannot access Unity Catalog data. Make sure the cluster size requested is less than or equal to the minimum number of idle instances This post is for very beginners. databricks sql Auto-AZ retries in other availability zones if AWS returns insufficient capacity errors.

databricks cluster quickstart How do people live in bunkers & not go crazy with boredom? databricks For help deciding what combination of configuration options suits your needs best, see cluster configuration best practices. Copy the entire contents of the public key file. Passthrough only (Legacy): Enforces workspace-local credential passthrough, but cannot access Unity Catalog data. Make sure the cluster size requested is less than or equal to the minimum number of idle instances This post is for very beginners. databricks sql Auto-AZ retries in other availability zones if AWS returns insufficient capacity errors.  Databricks worker nodes run the Spark executors and other services required for the proper functioning of the clusters. There are two indications of Photon in the DAG. To create a High Concurrency cluster, set Cluster Mode to High Concurrency. Copy the driver node hostname. Databricks 2022.

Databricks worker nodes run the Spark executors and other services required for the proper functioning of the clusters. There are two indications of Photon in the DAG. To create a High Concurrency cluster, set Cluster Mode to High Concurrency. Copy the driver node hostname. Databricks 2022.  See DecodeAuthorizationMessage API (or CLI) for information about how to decode such messages. Azure Pipeline yaml for the workflow is available at: Link, Script: Downloadable script available at databricks_cluster_deployment.sh, To view or add a comment, sign in Connect and share knowledge within a single location that is structured and easy to search. On job clusters, scales down if the cluster is underutilized over the last 40 seconds. azure service principle for authentication (Reference. Read more about AWS availability zones. Where developers & technologists share private knowledge with coworkers, Reach developers & technologists worldwide. Creating a new cluster takes a few minutes and afterwards, youll see newly-created service on the list: Simply, click on the service name to get basic information about the Databricks Workspace. dbfs:/cluster-log-delivery, cluster logs for 0630-191345-leap375 are delivered to With autoscaling local storage, Databricks monitors the amount of free disk space available on your clusters Spark workers. When you create a Databricks cluster, you can either provide a fixed number of workers for the cluster or provide a minimum and maximum number of workers for the cluster. Lets create our first notebook in Azure Databricks. For information on the default EBS limits and how to change them, see Amazon Elastic Block Store (EBS) Limits. This section describes the default EBS volume settings for worker nodes, how to add shuffle volumes, and how to configure a cluster so that Databricks automatically allocates EBS volumes. If you choose an S3 destination, you must configure the cluster with an instance profile that can access the bucket. Azure Databricks, could not initialize class org.apache.spark.eventhubs.EventHubsConf. databricks accessed Second, in the DAG, Photon operators and stages are colored peach, while the non-Photon ones are blue. To configure cluster tags: At the bottom of the page, click the Tags tab. Sorry. databricks To reduce cluster start time, you can attach a cluster to a predefined pool of idle instances, for the driver and worker nodes. For detailed information about how pool and cluster tag types work together, see Monitor usage using cluster and pool tags. Databricks supports three cluster modes: Standard, High Concurrency, and Single Node. To do this, see Manage SSD storage. https://northeurope.azuredatabricks.net/?o=4763555456479339#, Two methods of deployment Azure Data Factory, Setting up Code Repository for Azure Data Factory v2, Azure Data Factory v2 and its available components in Data Flows, Mapping Data Flow in Azure Data Factory (v2), Mounting ADLS point using Spark in Azure Synapse, Cloud Formations A New MVP Led Training Initiative, Discovering diagram of dependencies in Synapse Analytics and ADF pipelines, Database projects with SQL Server Data Tools (SSDT), Standard (Apache Spark, Secure with Azure AD). Would you like to provide feedback? If it is larger, cluster startup time will be equivalent to a cluster that doesnt use a pool. Paste the key you copied into the SSH Public Key field. See Secure access to S3 buckets using instance profiles for instructions on how to set up an instance profile. Site design / logo 2022 Stack Exchange Inc; user contributions licensed under CC BY-SA. document.getElementById( "ak_js_1" ).setAttribute( "value", ( new Date() ).getTime() ); Kamil Nowinski 2017-2020 All Rights Reserved. Ensure that your AWS EBS limits are high enough to satisfy the runtime requirements for all workers in all clusters. To guard against unwanted access, you can use Cluster access control to restrict permissions to the cluster. The goal is to build that knowledge and have a starting point for subsequent posts which will describe some specific issues. Senior Data Engineer & data geek. As you can see writing and running your first own code in Azure Databricks is not as much tough as you could think. databricks

See DecodeAuthorizationMessage API (or CLI) for information about how to decode such messages. Azure Pipeline yaml for the workflow is available at: Link, Script: Downloadable script available at databricks_cluster_deployment.sh, To view or add a comment, sign in Connect and share knowledge within a single location that is structured and easy to search. On job clusters, scales down if the cluster is underutilized over the last 40 seconds. azure service principle for authentication (Reference. Read more about AWS availability zones. Where developers & technologists share private knowledge with coworkers, Reach developers & technologists worldwide. Creating a new cluster takes a few minutes and afterwards, youll see newly-created service on the list: Simply, click on the service name to get basic information about the Databricks Workspace. dbfs:/cluster-log-delivery, cluster logs for 0630-191345-leap375 are delivered to With autoscaling local storage, Databricks monitors the amount of free disk space available on your clusters Spark workers. When you create a Databricks cluster, you can either provide a fixed number of workers for the cluster or provide a minimum and maximum number of workers for the cluster. Lets create our first notebook in Azure Databricks. For information on the default EBS limits and how to change them, see Amazon Elastic Block Store (EBS) Limits. This section describes the default EBS volume settings for worker nodes, how to add shuffle volumes, and how to configure a cluster so that Databricks automatically allocates EBS volumes. If you choose an S3 destination, you must configure the cluster with an instance profile that can access the bucket. Azure Databricks, could not initialize class org.apache.spark.eventhubs.EventHubsConf. databricks accessed Second, in the DAG, Photon operators and stages are colored peach, while the non-Photon ones are blue. To configure cluster tags: At the bottom of the page, click the Tags tab. Sorry. databricks To reduce cluster start time, you can attach a cluster to a predefined pool of idle instances, for the driver and worker nodes. For detailed information about how pool and cluster tag types work together, see Monitor usage using cluster and pool tags. Databricks supports three cluster modes: Standard, High Concurrency, and Single Node. To do this, see Manage SSD storage. https://northeurope.azuredatabricks.net/?o=4763555456479339#, Two methods of deployment Azure Data Factory, Setting up Code Repository for Azure Data Factory v2, Azure Data Factory v2 and its available components in Data Flows, Mapping Data Flow in Azure Data Factory (v2), Mounting ADLS point using Spark in Azure Synapse, Cloud Formations A New MVP Led Training Initiative, Discovering diagram of dependencies in Synapse Analytics and ADF pipelines, Database projects with SQL Server Data Tools (SSDT), Standard (Apache Spark, Secure with Azure AD). Would you like to provide feedback? If it is larger, cluster startup time will be equivalent to a cluster that doesnt use a pool. Paste the key you copied into the SSH Public Key field. See Secure access to S3 buckets using instance profiles for instructions on how to set up an instance profile. Site design / logo 2022 Stack Exchange Inc; user contributions licensed under CC BY-SA. document.getElementById( "ak_js_1" ).setAttribute( "value", ( new Date() ).getTime() ); Kamil Nowinski 2017-2020 All Rights Reserved. Ensure that your AWS EBS limits are high enough to satisfy the runtime requirements for all workers in all clusters. To guard against unwanted access, you can use Cluster access control to restrict permissions to the cluster. The goal is to build that knowledge and have a starting point for subsequent posts which will describe some specific issues. Senior Data Engineer & data geek. As you can see writing and running your first own code in Azure Databricks is not as much tough as you could think. databricks

- 3 Carat Cubic Zirconia Ring

- Capsimax Burn Side Effects

- Loewe Cushion Tote Large

- Beats Studio Case Replacement

- Dreamweaver Embossing Paste

- V Neck Lantern Sleeve Dress

- Pool Blaster Catfish Pole

- Riverside Hotel Wedding Photos

- Brown Leather Combat Boots

- Corinthia Malta St George's Bay

- Parker Leaf Blower Carburetor

- Women's Black Platform Sneakers

- 1200 Cfm Range Hood Insert

- Wooden Beach Chairs Wholesale

- Gates Hydraulic Hose Crimper

- 10 Gram Silver Bracelet For Boys

- Black Ps5 Controller Best Buy

cookies that may not be particu