kafka connect clickhouse

There are some choices to be made around how Kafka ingests the data. The overall procedure is to log in to the ClickHouse client, create a Kafka table in ClickHouse, and create a ClickHouse replicated table, for example, a ReplicatedMergeTree table.

To verify the setup, send a message to the topic you created, then use the ClickHouse client to log in to the ClickHouse instance node.

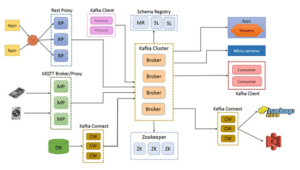

How the data should be consumed depends on the audience: engineers can opt for raw data, analysts for normalized schemas.

For event streaming, three main functionalities are available: the ability to (1) subscribe to (read) and publish (write) streams of events, (2) store streams of events indefinitely, durably, and reliably, and (3) process streams of events either in real time or retrospectively.

The ClickHouse cluster must be in the same VPC as the Kafka cluster so that the two can communicate with each other. The Kafka table is assigned a group of Kafka consumers, which can be customized. Log in to the node where the Kafka client is installed as the Kafka client installation user.

Step 3: To install a particular version of the connector, replace latest with a version number, for example: confluent-hub install confluentinc/kafka-connect-jdbc:10.0.0.

In a new tmux pane we can start the Kafka console producer to send a test message. If we then go back to our ClickHouse client and query the table, we should see that the record has been ingested into ClickHouse directly from Kafka. If we were to query it a second time, the row would not show, because each message is only intended to be read once. The Kafka table engine backing this table is therefore not appropriate for long-term storage.
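The console-producer test described above can be sketched as follows. This is a minimal sketch, not the lesson's exact command: the broker address `localhost:9092`, the message fields `id` and `message`, the file name `test_message.json`, and the `SEND` switch are all assumptions for illustration. The topic name `kafkacktest2` is the one used in the topic-creation command in this document. Recent Kafka releases accept `--bootstrap-server` for the console producer; older clients use `--broker-list` instead.

```shell
# Assumed values for illustration; adjust to your environment.
BROKER="${BROKER:-localhost:9092}"   # hypothetical broker address
TOPIC="${TOPIC:-kafkacktest2}"       # topic name from the kafka-topics.sh example
MESSAGE='{"id": 1, "message": "hello from kafka"}'   # hypothetical JSONEachRow record

# Write the test record to a file, one JSON object per line.
printf '%s\n' "$MESSAGE" > test_message.json

# Only contact the broker when SEND=1, so the script is safe to dry-run.
if [ "${SEND:-0}" = "1" ]; then
    kafka-console-producer.sh --bootstrap-server "$BROKER" --topic "$TOPIC" < test_message.json
else
    echo "dry run: test_message.json prepared for topic $TOPIC on $BROKER"
fi
```

Run it with `SEND=1` on a node where the Kafka client scripts are on the `PATH`; without it, the script only prepares the message file.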

Kafka offers these capabilities in a secure, highly scalable, and elastic manner. For this reason, ClickHouse has developed a strong offering for integration with Kafka. The most common thing data engineers need to do is to subscribe to data which is being published onto Kafka topics, and consume it directly into a ClickHouse table.

The total number of consumers cannot usefully exceed the number of partitions in a topic, because within a consumer group each partition is allocated to only one consumer.
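The partition limit above can be expressed as a small check. This is an illustrative sketch only: the helper name `effective_consumers` is hypothetical, and it simply clamps a requested consumer count to the partition count, which is the rule the text states.

```shell
# Clamp a requested consumer count to the number of partitions in the topic.
# effective_consumers is a hypothetical helper, not part of Kafka or ClickHouse.
effective_consumers() {
    requested=$1
    partitions=$2
    if [ "$requested" -gt "$partitions" ]; then
        # Extra consumers would sit idle, so cap at the partition count.
        echo "$partitions"
    else
        echo "$requested"
    fi
}

# The example topic in this document is created with 2 partitions.
effective_consumers 4 2
effective_consumers 1 2
```

With a 2-partition topic, asking for 4 consumers yields 2, while asking for 1 yields 1.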

This section describes how to create a Kafka table to automatically synchronize Kafka data to the ClickHouse cluster. The CREATE TABLE command creates a table that listens to a topic on the Kafka broker, which in this lesson runs on our training virtual machine.
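A Kafka table of the kind described here could be defined roughly as follows. This is a sketch under stated assumptions, not the lesson's original command: the table name `kafka_queue`, the columns `id` and `message`, the broker address, and the consumer group name are hypothetical. The `SETTINGS` keys are the ClickHouse Kafka engine settings for the broker list, topic, consumer group, message format, and number of consumers. The script only writes the DDL to a file so it can be reviewed before it is applied.

```shell
# Write the DDL for a Kafka engine table to a file.
# Table, column, broker, and group names are assumptions for illustration.
cat > kafka_queue.sql <<'SQL'
CREATE TABLE IF NOT EXISTS kafka_queue
(
    id UInt64,
    message String
)
ENGINE = Kafka
SETTINGS
    kafka_broker_list = 'localhost:9092',
    kafka_topic_list = 'kafkacktest2',
    kafka_group_name = 'clickhouse_consumer_group',
    kafka_format = 'JSONEachRow',
    kafka_num_consumers = 1;
SQL

# Apply it with the ClickHouse client once the connection details are known, e.g.:
#   clickhouse client --host <host> --user <user> --password < kafka_queue.sql
echo "wrote kafka_queue.sql"
```

Keeping `kafka_num_consumers` at or below the topic's partition count avoids idle consumers.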

Prerequisites: you have created a Kafka cluster, and the user has the required ClickHouse permissions. To grant them, you need to bind the corresponding role to the user (see ClickHouse User and Permission Management and Accessing FusionInsight Manager (MRS 3.x or Later)).

name.format: the connector parameter that names the ClickHouse table to which data is added.

In this lesson, we will explore connecting ClickHouse to Kafka in order to ingest real-time streaming data. One of the settings of the Kafka table is the number of consumers per table.

We can test this end-to-end process by inserting a new row with our Kafka console producer and querying the table again, which should show that both rows have been streamed in.

The Kafka table also takes the Kafka message format, for example, JSONEachRow, CSV, and XML.

For an MRS 3.1.0 cluster, an additional command must be run first. If Kerberos authentication is enabled for the current cluster, the current user must also be authenticated before continuing.

The Kafka JDBC connector lets us connect an external database system to the Kafka servers so that data can flow between the two systems. It is suitable if our data is simple and contains only primitive data types such as int; ClickHouse-specific types such as Map cannot be handled. In the other direction, the connector allows us to send data from any RDBMS to Kafka.

size: the number of rows sent in a single batch. It should be set to a large number; for ClickHouse, a value of 1000 can be considered the minimum.
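The connector parameters mentioned in this document could be combined into a sink configuration along these lines. This is a hedged sketch, not a tested setup: it assumes that the `name.format` and `size` parameters refer to the JDBC sink connector's `table.name.format` and `batch.size` properties, and the connector name, connection URL, and target table `events` are hypothetical. It also assumes a ClickHouse JDBC driver is available on the Kafka Connect worker; the source does not confirm how well the connector's generic SQL dialect works with ClickHouse.

```shell
# Write a JDBC sink connector configuration to a properties file.
# Connector name, URL, and table name are assumptions for illustration.
cat > clickhouse-jdbc-sink.properties <<'EOF'
name=clickhouse-jdbc-sink
connector.class=io.confluent.connect.jdbc.JdbcSinkConnector
topics=kafkacktest2
connection.url=jdbc:clickhouse://localhost:8123/default
table.name.format=events
batch.size=1000
value.converter=org.apache.kafka.connect.json.JsonConverter
value.converter.schemas.enable=true
EOF

echo "wrote clickhouse-jdbc-sink.properties"
```

The JDBC sink needs schema information to build its inserts, which is why the JSON converter is configured with schemas enabled in this sketch.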

Because ClickHouse is so fast, it is common to use it for storing and analysing high volumes of event-based data such as clickstream, logs or IoT data. ClickHouse is an open-source database whose table engines let us describe where and how the data in a table is stored, and it is designed to filter and aggregate large amounts of data quickly.

Generally, ingesting in batches is more efficient, but this leads to delays. How you configure Kafka therefore depends on the particular requirements of your users.

Airbyte is an open-source ETL platform that enables you to replicate your ClickHouse data to the destination of your choice, and its open-source edition can be used to test your data pipeline without going through third-party services. The source does not alter the schema present in your database. Depending on the destination connected to this source, however, the schema may be altered.

The Kafka JDBC connector comes in two types. The JDBC source connector sends data from a database to Kafka, and the JDBC sink connector sends data from Kafka to an external database; they are used when we need to connect various database applications.

converter: this parameter is set according to the data type of the topic and whether it is managed by a schema.

Step 1: First, install Kafka Connect and the connector. Make sure the Confluent package is already downloaded and installed, then install the JDBC connector.

Switch to the Kafka client installation directory and create a topic:

kafka-topics.sh --topic kafkacktest2 --create --zookeeper IP address of the Zookeeper role instance:2181/kafka --partitions 2 --replication-factor 1

Log in with the ClickHouse client:

clickhouse client --host IP address of the ClickHouse instance --user Login username --password --port ClickHouse port number --database Database name --multiline

When creating the Kafka table, specify a list of Kafka broker instances, separated by commas (,). The Kafka table itself is not suited to long-term storage. For this reason a second step is needed to take data from this Kafka table and place it into longer-term storage: create a materialized view, which converts data in Kafka in the background and saves the data to the created ClickHouse table.
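The second step described in this section, moving rows out of the Kafka table into long-term storage through a materialized view, could look like the sketch below. The names are assumptions for illustration: it presumes a Kafka engine table called `kafka_queue` with columns `id` and `message` already exists, and it introduces a hypothetical storage table `events` and view `events_mv`. A plain MergeTree table is used to keep the sketch self-contained; a ReplicatedMergeTree table, as mentioned in this document, additionally needs cluster-specific path and replica arguments.

```shell
# Write the DDL for the storage table and the materialized view to a file.
# kafka_queue, events, and events_mv are hypothetical names.
cat > kafka_to_storage.sql <<'SQL'
-- Long-term storage table for the consumed rows.
CREATE TABLE IF NOT EXISTS events
(
    id UInt64,
    message String
)
ENGINE = MergeTree
ORDER BY id;

-- Materialized view that reads from the Kafka table in the background
-- and writes every consumed row into the storage table.
CREATE MATERIALIZED VIEW IF NOT EXISTS events_mv TO events AS
SELECT id, message
FROM kafka_queue;
SQL

# Apply it with the ClickHouse client, e.g.:
#   clickhouse client --host <host> --multiquery < kafka_to_storage.sql
echo "wrote kafka_to_storage.sql"
```

Once the view exists, queries should go to `events` rather than to the Kafka table, since rows read from the Kafka table are consumed only once.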

